Investigation in Isolation: myQ Garage Door

This is the next in my series of investigations into the various home automation devices I have installed. Today's post covers the myQ garage door opener by LiftMaster. My family lives in a townhouse with a massive, and massively heavy, garage door. After a few underpowered openers crashed and burned (including one that dropped the heavy door on our neighbor's minivan), we had a commercial-grade LiftMaster opener installed. It came with "myQ home automation technology" powered by Chamberlain.

Setting up the software was trivially easy. I installed the iPhone app (which conveniently has an Apple Watch companion app), connected to the controller with the app, and gave it my Wi-Fi information. It has been rock solid ever since.

This "Investigation" series of posts sees me running packet captures on my LAN on the regular. While I would've gotten around to writing about the myQ app eventually, what stood out to me while I was browsing some captures (diagnosing a separate networking issue) was that, according to Wireshark, there was "MQTT" traffic on my network. This struck a nerve: one of the networks I investigate regularly at work uses IBM MQ, so I've spent some time researching it professionally. MQTT, short for "MQ Telemetry Transport," is a lightweight publish/subscribe messaging protocol that came out of IBM's MQ family and is geared specifically toward Internet of Things devices. Well, this piqued my curiosity.

Basic Behavior

From what I can tell, the device will start by issuing a DNS query for connect1.myqdevice.com.

Interestingly, the DNS query is issued to 8.8.8.8; at one point in the past my DHCP server would issue 8.8.8.8 as the DNS server for my connected devices, but this hasn't been the case for a long, long time. Thus I suspect the LiftMaster/myQ may be hardcoded to use 8.8.8.8 as its DNS server. This is a bummer.

This connect1.myqdevice.com name is a CNAME record that ultimately resolves to one of a bunch of load-balanced servers living in the Azure cloud. They all follow a pattern of connectN-east.myq-cloud.com or connectN-west.myq-cloud.com, where N ranges from one to six. (Twelve MQTT brokers running as cloud servers in Azure, handling all of the garage door openers?)

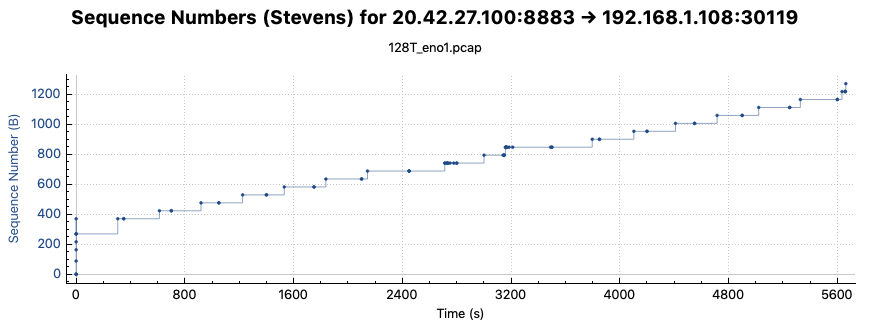

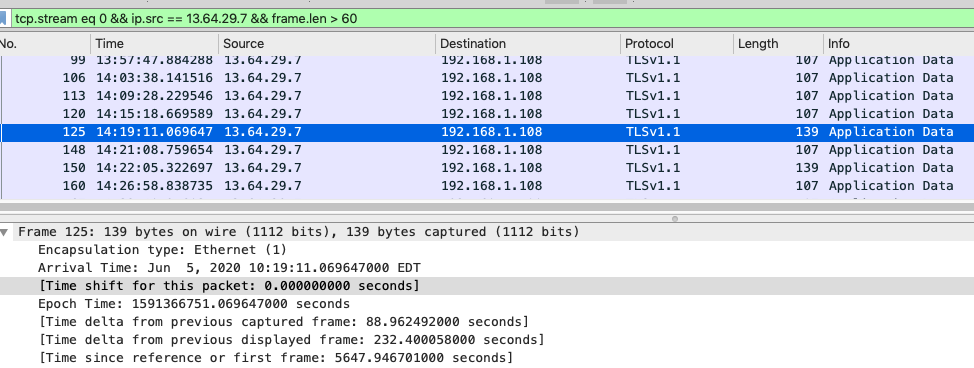

The controller then connects to the server on TCP/8883 (MQTT over TLS) using TLS 1.1. The two devices then continuously send small (approximately 100-byte) packets to one another. The server sends 107-byte packets every 5:50. The controller appears to send two interleaved streams: 139-byte packets every 5:06, and 107-byte packets every 5:50.

In 24 hours of capture, I saw the myQ connect three times, to three different MQTT brokers. The first connection ended abruptly – no RST, FIN, or anything of the sort, strangely. The second connection ended when the server sent back an Encrypted Alert message to gracefully tear down the connection after approximately 90 minutes. The myQ then immediately sent another DNS query, received a new MQTT broker to connect to, and repeated its process. The third connection is still going strong as I type this.

The ups and downs

Yesterday was one of the rare occasions when I went into the office. As part of my experimentation, I used the myQ iPhone app (which is excellent, by the way) to lift the garage door instead of my typical mode of using the remote control. Looking now at the app history, I see that I opened the garage door at 10:19am. Sure enough, in my Wireshark trace I can see at 10:19am there was a signal sent from the cloud service (as a 139 byte packet, the first such packet seen from the cloud).

So there we have it: 107 byte packets sent regularly in both directions every six minutes or so, and 139 byte control packets sent to exchange notable events.
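To make that reading of the capture concrete, here is a minimal Python sketch that buckets packets by length and converts the mm:ss intervals to seconds. It assumes the working interpretation above (107 bytes is a keepalive, 139 bytes is an event); the function names and the sample list are hypothetical, not something pulled from the capture itself.

```python
from collections import Counter

# Packet sizes reported in the capture above (bytes).
KEEPALIVE_LEN = 107
EVENT_LEN = 139


def classify(length: int) -> str:
    """Label a packet by its length, per the interpretation in this post."""
    if length == KEEPALIVE_LEN:
        return "keepalive"
    if length == EVENT_LEN:
        return "event"
    return "other"


def mmss_to_seconds(text: str) -> int:
    """Convert an interval written as m:ss (e.g. '5:50') to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def summarize(lengths):
    """Count how many packets fall into each bucket."""
    return Counter(classify(n) for n in lengths)


if __name__ == "__main__":
    sample = [107, 107, 139, 107, 66]  # hypothetical lengths, for illustration
    print(summarize(sample))
    print(mmss_to_seconds("5:50"), mmss_to_seconds("5:06"))
```

Feeding it the frame lengths exported from a real trace would show quickly whether anything other than these two sizes ever crosses the connection.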

Securing the Service

My sample data set is pretty small, so I don't know what I don't know. There could be some weekly routine where the device reaches out to totally separate infrastructure to check for firmware updates, for example. As always, I'll walk through some 128T data modeling examples to show how I lock it down. This consists of two major components: identifying the garage door controller as a network tenant and specifying the cloud servers as a service, then creating access controls that allow only that tenant to reach that service.

The myQ tenant and service

Simply put, I'll identify it by IP address. My DHCP server is a Raspberry Pi running the old trusty ISC dhcpd. I have a static assignment for the myQ to use 192.168.1.108. Thus my tenant definition looks like this:

*admin@labsystem1.fiedler (tenant[name=myq.iot])# show

name myq.iot

description "myQ garage door opener"

member beacon-lan

neighborhood beacon-lan

address 192.168.1.108/32

exit

I use a top-level iot tenant for all of my home automation gadgets. This makes it easy for me to create service definitions for all of them in one fell swoop, but still gives me the flexibility to create specific services, as we'll do next for the myQ:

*admin@labsystem1.fiedler (service[name=MYQ])# show

name MYQ

applies-to router

type router

router-name newton

exit

description "myQ Garage Door cloud service"

scope private

transport tcp

protocol tcp

port-range 8883

start-port 8883

end-port 8883

exit

exit

address connect1-east.myq-cloud.com

address connect2-east.myq-cloud.com

address connect3-east.myq-cloud.com

address connect4-east.myq-cloud.com

address connect5-east.myq-cloud.com

address connect6-east.myq-cloud.com

address connect1-west.myq-cloud.com

address connect2-west.myq-cloud.com

address connect3-west.myq-cloud.com

address connect4-west.myq-cloud.com

address connect5-west.myq-cloud.com

address connect6-west.myq-cloud.com

access-policy myq.iot

source myq.iot

permission allow

exit

Why did I spell out all of the various permutations of the cloud service? When you configure an FQDN as a service address on your 128T, it does DNS resolution to learn which address(es) to install into its FIB. I didn't want my 128T to resolve connect1.myqdevice.com to something different than the myQ did. Given that the myQ is using Google's resolver (for now...), my own local DNS cache isn't applicable. So I'm asking my 128T to resolve all 12 addresses and install FIB entries for all of the possible resolutions that may occur.
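As a rough illustration of what "resolve all 12 and take the union" means, here is a small Python sketch that builds the twelve names from the connectN-east/west pattern and collects every IPv4 address they resolve to. This is only an approximation of the idea, not how the 128T does it internally; the function names are my own, and the results depend on whichever resolver the host running the script uses.

```python
import socket

REGIONS = ("east", "west")
INSTANCES = range(1, 7)  # N ranges from one to six


def broker_names():
    """Build the twelve broker FQDNs, east first and then west."""
    return [
        f"connect{n}-{region}.myq-cloud.com"
        for region in REGIONS
        for n in INSTANCES
    ]


def resolve_all(names, port=8883):
    """Return the set of IPv4 addresses that the given names resolve to."""
    addresses = set()
    for name in names:
        try:
            infos = socket.getaddrinfo(
                name, port, family=socket.AF_INET, type=socket.SOCK_STREAM
            )
        except socket.gaierror:
            continue  # skip names that fail to resolve
        for info in infos:
            addresses.add(info[4][0])  # sockaddr is (ip, port) for AF_INET
    return addresses


if __name__ == "__main__":
    for address in sorted(resolve_all(broker_names())):
        print(address)
```

Running this against both the local resolver and 8.8.8.8 (from a host that is allowed to reach it) would be one way to see whether the two ever disagree.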

Here's the simple service-route that accompanies the service:

*admin@labsystem1.fiedler (service-route[name=rte_MYQ])# show

name rte_MYQ

service-name MYQ

next-hop labsystem2 fios

node-name labsystem2

interface fios

exit

It directs all of the traffic out through my Verizon FIOS connection.

Last, I'll create a session-type to identify and prioritize the MQTT traffic appropriately:

*admin@labsystem1.fiedler# show config candidate auth session-type MQTT

config

authority

session-type MQTT

name MQTT

description "MQ Telemetry Transport"

service-class OAM

timeout 360000

transport tcp

protocol tcp

port-range 8883

start-port 8883

end-port 8883

exit

port-range 1883

start-port 1883

end-port 1883

exit

exit

exit

exit

exit

Fixing the DNS problem

I don't like it when devices bypass my local DNS server. This configuration will "trap" the DNS activity from the device and force it to use my local server instead of Google:

*admin@labsystem1.fiedler# show config candidate authority service iot-dns

config

authority

service iot-dns

name iot-dns

applies-to router

type router

router-name newton

exit

scope private

transport udp

protocol udp

port-range 53

start-port 53

end-port 53

exit

exit

address 0.0.0.0/0

access-policy iot

source iot

exit

exit

exit

exit

This will match any and all traffic arriving on 53/UDP from any of my IoT devices.

*admin@labsystem1.fiedler# show config candidate auth router newton service-route rte_iot-dns

config

authority

router newton

name newton

service-route rte_iot-dns

name rte_iot-dns

service-name iot-dns

nat-target 169.254.127.127

exit

exit

exit

exit

This configuration forces that traffic to the Linux host (using kni254), where it is answered by the (fabulous) Pi-hole server I'm running on the platform in a Docker container.
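One way to check that the trap is working is to send a query addressed to 8.8.8.8 for a name only the local server should know. The Python sketch below hand-builds a DNS A query and inspects the response header. It assumes the Pi-hole answers for its own pi.hole name (which a public resolver would not), and that the script runs from a host inside the iot tenant; the function names and transaction ID are arbitrary choices of mine.

```python
import socket
import struct

TXID = 0x1234  # arbitrary transaction ID for this sketch


def build_query(name: str, txid: int = TXID) -> bytes:
    """Build a minimal DNS query for an A record (recursion desired)."""
    # Header: ID, flags (RD bit set), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def parse_header(response: bytes):
    """Return (transaction id, rcode, answer count) from a DNS response."""
    txid, flags, _qd, ancount, _ns, _ar = struct.unpack("!HHHHHH", response[:12])
    return txid, flags & 0x000F, ancount


def looks_trapped(server="8.8.8.8", name="pi.hole", timeout=3.0) -> bool:
    """True if a query sent to `server` gets a successful answer for `name`."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            sock.settimeout(timeout)
            sock.sendto(build_query(name), (server, 53))
            response, _ = sock.recvfrom(512)
    except OSError:  # includes timeouts and unreachable networks
        return False
    txid, rcode, ancount = parse_header(response)
    return txid == TXID and rcode == 0 and ancount > 0


if __name__ == "__main__":
    print("DNS appears trapped:", looks_trapped())
```

If the query really reached Google, the expected result would be a name error with no answers, so a successful answer suggests the local server replied instead.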

Conclusion

I love the myQ, though it admittedly doesn't get very much use: 99.9% of the time my garage door opener behaves just like anyone else's garage door opener. But there have been two circumstances where it has proven its worth:

- We had some contractors over to do work in the garage, and I could make sure they came and went as agreed.

- I went out for a run, and by the time I got back my family had left and I was stuck outside. The Apple Watch app let me open the garage from my wrist.

I'll keep an eye on these security adjustments to see if there are any refinements that need to be made, and update this blog post accordingly.